Algorithmic Bias, Personalization, and E-commerce: Ethical Challenges and Emerging Business Patterns (Mind Map)

U396976854

2025-04-25

Ethical Challenges in Algorithmic and E-commerce Systems


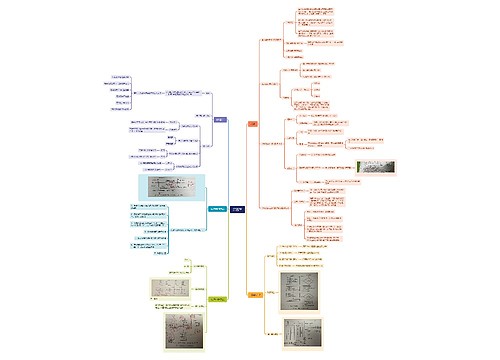


1. Introduction

<a id="OLE_LINK3"></a>In the digital era, algorithms and big data technologies have penetrated everyday social life, giving users accurate, personalised services while improving platform efficiency and user experience. However, flaws in algorithm design can trigger fairness disputes, discrimination against users and privacy risks, posing serious ethical challenges.

Enterprises that use algorithms need to balance personalisation with ethical norms, reduce negative impacts through transparent and inclusive design principles, and ensure long-term sustainability. Moreover, the rapid development of e-commerce, especially data-driven decision-making, has exposed the risk that biased algorithms implicitly manipulate user behaviour, exacerbating existing ethical dilemmas.

This paper focuses on algorithmic bias, behavioural targeting and e-commerce models, analyses their social impacts, and proposes optimisation solutions, in the hope of promoting a more equitable and sustainable digital economy.

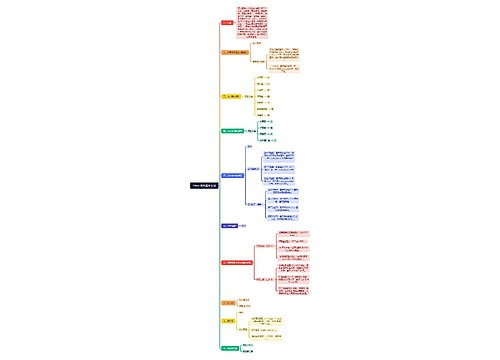

2. Algorithmic Bias and Personalization

2.1 Impact of Algorithmic Bias

Algorithmic bias refers to biased or unfair output from an AI system caused by flawed training data, incomplete assumptions, or limitations in the design itself. This bias not only reduces the accuracy and reliability of AI output, but also undermines fairness, inclusiveness, and user trust. Problems observed in practice include recruitment algorithms that favour specific genders or races and financial credit scoring systems that discriminate against low-income groups. In principle, the core problem is that algorithms amplify historical biases already present in the training data, which can entrench existing social inequality.

Generative AI models reflect many of these problems. Take ChatGPT as a representative example: while ChatGPT claims not to store user data or retain personal information, it admits that dialogue data is stored on OpenAI's servers or the servers of a cloud service provider. This data may be used in future iterations of the model, which raises privacy risks. Because AI models are trained on large-scale datasets from the Internet, those datasets inevitably contain personal information that users have not consented to share. This increases the risk that sensitive information will reappear in AI-generated responses. For example, deliberate prompting and manipulation may lead a model to inadvertently generate responses that contain private data, which can lead to the leakage of personal information. One representative incident, the unintentional disclosure of company secrets by a Samsung employee while using ChatGPT, highlights the limitations of AI in protecting confidential information.

The main sources of algorithmic bias are biased training data and problems in algorithm design. Much training data reflects historical social inequalities, such as differences in gender, race, or economic status, and models may replicate or even amplify these patterns as they learn. At the same time, parameter choices and design assumptions can introduce bias of their own, especially when models cater to mainstream trends and neglect the needs of minority groups.

These biases can have unfair consequences in practical applications. For example, recruitment algorithms may exclude candidates from specific groups, and financial systems may set higher thresholds for low-income groups. At a societal level, AI applications in law enforcement and healthcare could have an even greater negative impact on vulnerable groups, and large-scale data mining and surveillance technologies have triggered crises of privacy and trust. Although the direct link between algorithmic bias and the environment is limited, the energy-intensive nature of AI and the rare-earth resources on which hardware production depends still pose a long-term threat to the ecosystem. This paper holds that the complexity of these issues requires a concerted effort in both technological design and regulation.

Addressing this issue requires a multifaceted approach spanning data, technology and ethics: optimising data quality to reduce bias, improving the transparency and interpretability of algorithms, and making AI decision-making more open and traceable. Policies and laws can strengthen the regulatory system so that AI strikes a balance between fairness and efficiency. Only through multi-party collaboration can the impact of algorithmic bias be effectively mitigated, so that AI truly serves society and promotes fair and sustainable development.
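One way to make "optimising data quality to reduce bias" concrete is sample reweighing, a pre-processing technique in which each training example is weighted so that group membership and outcome look statistically independent. The sketch below is illustrative only and is not a method proposed in this paper; the function name `reweighing_weights` and the toy hiring data are hypothetical.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Return one weight per sample: P(group) * P(label) / P(group, label).

    All probabilities are estimated from the data passed in.
    """
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

# Hypothetical toy data: group "a" was hired (label 1) more often than group "b".
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

# Under-represented combinations, such as ("b", 1), receive weights above 1.
for g, y, w in zip(groups, labels, weights):
    print(g, y, round(w, 3))
```

With these weights, the weighted positive rate is the same for both groups in the toy data, so a model trained on the weighted samples sees less of the historical imbalance. Reweighing addresses only the measured attribute and does not remove bias carried by correlated features.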

2.2 Benefits of Personalization

Relying on AI technologies such as deep learning, personalised recommendation systems analyse user behavioural data to make accurate recommendations, which can improve conversion rates and strengthen user loyalty. Generative AI and large language models make recommendations smarter; in Meta's Reels and Instagram, for example, AI can increase user engagement and content exposure.

Meanwhile, recommendation systems significantly improve business efficiency. AI mines user behaviour data to help companies gain precise insight into demand and quickly adjust resource allocation. Optimising advertising through AI not only improves ROI but also, to a certain extent, improves the efficiency of budget use, creating higher value for the enterprise.

Recommender systems will become more intelligent and personalised in the foreseeable future, and companies that embrace these innovations will be better placed to enhance their competitiveness and expand their market share. In the Chinese market, the adoption of personalised recommendation technologies is still evolving, and more enterprises are expected to adopt these systems to meet the growing needs of their users.

3. Data Collection Mechanisms for Behavioral Targeting

Behavioural targeting enables personalised content delivery and customer segmentation through systematic collection of user data. According to my analysis, the main data collection methods are: cookies, small files stored on the user's device to track browsing activity; web beacons, invisible trackers embedded in websites or emails to monitor user behaviour; device fingerprinting, which enables cross-platform tracking by identifying unique characteristics of the device; and social media platforms such as Facebook and Instagram, which collect data on user behaviour, preferences and interactions. The collected data is analysed to identify patterns of user behaviour based on criteria such as demographics, interest preferences, purchase intent and geolocation. To ensure ethical use of data, companies need to continuously monitor their practices and communicate with stakeholders, and commit to transparency and ethical compliance in order to maintain user trust and achieve sustainable growth.
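The segmentation step described above can be sketched as a simple rule-based classifier over collected events. The event format, the `segment_user` function and the cart threshold are all assumptions made for illustration; real systems typically combine many more signals and statistical models.

```python
from collections import Counter

def segment_user(events, cart_threshold=2):
    """Assign a coarse segment from a list of (action, category) events."""
    # Interest preference: the category the user viewed most often.
    views = Counter(cat for action, cat in events if action == "view")
    top_interest = views.most_common(1)[0][0] if views else None

    # Purchase intent: number of add-to-cart actions against a threshold.
    cart_adds = sum(1 for action, _ in events if action == "add_to_cart")
    intent = "high_intent" if cart_adds >= cart_threshold else "browsing"

    return {"interest": top_interest, "intent": intent}

# Hypothetical event log for one user.
events = [
    ("view", "shoes"),
    ("view", "shoes"),
    ("view", "hats"),
    ("add_to_cart", "shoes"),
    ("add_to_cart", "shoes"),
]
print(segment_user(events))  # {'interest': 'shoes', 'intent': 'high_intent'}
```

Keeping the rules this explicit has a transparency benefit: a company can state exactly why a user was placed in a segment, which supports the disclosure and compliance commitments mentioned above.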

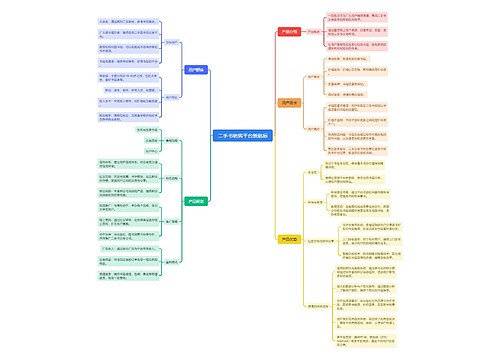

4. The Role of E-commerce in Modern Business

E-commerce has become an integral part of modern business and falls into three main types: business-to-consumer (B2C), where companies such as H&M sell directly to consumers online; business-to-business (B2B), which involves transactions between businesses, such as Microsoft providing software solutions to organisations; and consumer-to-consumer (C2C), which facilitates transactions between individuals through platforms such as eBay. E-commerce business models are also diverse, and each plays its own role. Retailers such as Amazon sell goods directly; transaction agents such as Expedia streamline processes and increase efficiency; marketplaces such as eBay connect buyers and sellers and facilitate transactions; streaming services act as content providers, giving users access to high-quality intellectual property; community platforms such as Facebook facilitate social interaction; and portals such as Yahoo consolidate a wide range of services.

5. Digital Goods and Markets


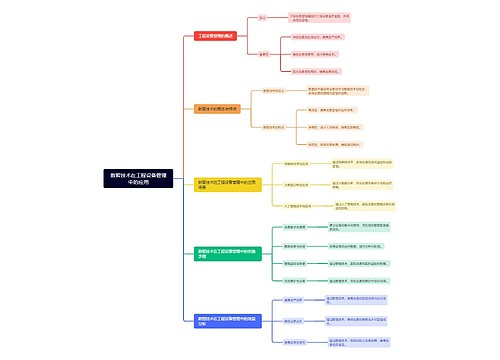

6. Ethical Challenges in Algorithmic and E-commerce Systems

With the widespread use of personalised AI systems, businesses face a number of ethical challenges. For example, algorithmic bias may marginalise specific groups, exacerbating social inequality to a certain extent; the opacity of algorithms makes it difficult for users to understand the recommendation logic, weakening trust; and reliance on personal data creates risks of privacy violations and information misuse.

To balance personalisation and ethics, companies can prioritise fairness and inclusion. This means incorporating fairness testing into model development, developing better explainable AI to make algorithms more transparent, and conducting regular bias reviews to identify and resolve issues in a timely manner. These measures not only enhance user trust but also help enterprises achieve sustainable development.

At the same time, OpenAI has raised warnings about some of GPT-4's capabilities, such as its tendency to plan towards long-term goals, accumulate resources and exhibit human-like thinking. These warnings have sparked global concern about the technological risks of AI-generated content (AIGC) and are expected to drive stricter regulation and accountability mechanisms. In China, the State Internet Information Office has drafted the Measures for the Administration of Generative Artificial Intelligence Services (Draft for Public Comments), which require that generative AI content reflect socialist core values, avoid false information, and protect intellectual property rights. In the U.S., the Department of Commerce is seeking public comment on accountability measures for AI technologies, including whether new AI models should be certified before release to control their potential risks. In Italy, the Personal Data Protection Authority imposed an injunction on ChatGPT on 31 March 2023, restricting OpenAI's processing of Italian user data and launching an investigation. Germany's data commissioner has also expressed concerns about data security and hinted at a possible ban on OpenAI's chatbot.

These ethical concerns can be addressed through ethical, legal and technological approaches. Ethical approaches include developing AI systems with embedded ethical reasoning and decision-making capabilities, using top-down, bottom-up or hybrid methods. The legal approach aims to regulate the development, deployment and application of AI through legislation, and governments and organisations are increasingly establishing laws to address AI-related ethical issues. Technological approaches, by contrast, focus on developing new techniques, especially in machine learning, to mitigate the shortcomings and ethical risks of current AI; research on interpretable AI, for example, aims to ensure transparency and fairness in decision-making and to reduce bias or discrimination.
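As one possible form of the fairness testing mentioned above, a bias review can compare how often a model gives a favourable decision to each group. The sketch below computes per-group selection rates and their ratio (often called the disparate impact ratio); the function names, the toy data and the 0.8 review threshold (the "four-fifths" heuristic used in US employment contexts) are illustrative assumptions, not requirements stated in this paper.

```python
def selection_rates(groups, decisions):
    """Return the share of favourable decisions (truthy values) per group."""
    totals, positives = {}, {}
    for g, d in zip(groups, decisions):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + (1 if d else 0)
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(groups, decisions):
    """Lowest group selection rate divided by the highest one."""
    rates = selection_rates(groups, decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical model decisions (1 = favourable) for two groups.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]

ratio = disparate_impact_ratio(groups, decisions)
print(selection_rates(groups, decisions))  # {'a': 0.75, 'b': 0.25}
print("flag for review:", ratio < 0.8)     # flag for review: True
```

A check like this can run as part of regular model evaluation so that a drop in the ratio triggers a manual bias review. It measures only one notion of fairness and should be read alongside other metrics and domain judgement.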

7. Conclusion

Algorithmic bias and personalisation play a crucial role in shaping modern business systems. Personalisation can enhance user experience and business efficiency, but if left unchecked, algorithmic bias can undermine fairness, inclusivity and trust. Therefore, businesses need to adopt transparent and inclusive AI practices to mitigate these risks. Meanwhile, the rise of e-commerce and digital marketplaces has considerably upgraded traditional business models, offering new opportunities for businesses and consumers by introducing greater efficiency and flexibility. Behavioural targeting and segmentation, as a key means of enabling effective personalisation, must be accompanied by ongoing monitoring and ethical oversight to ensure they meet fairness and ethical standards.

Reflection on the feedback

In our supplementary research, we further expanded on the concepts of personalized recommendation and algorithmic discrimination, discussing their advantages and disadvantages while providing more examples. At the same time, we conducted a detailed breakdown of the principles behind personalized recommendations and algorithmic discrimination, aiming to integrate them more effectively with information systems theory.

We conducted a comprehensive analysis of various operational scenarios in e-commerce and examined the potential algorithmic discrimination issues underlying these processes. Finally, to enhance the depth of our research, we reviewed relevant literature. Based on the findings from the literature and our critical reflections, we proposed a series of methods to address the ethical issues arising from personalized algorithmic recommendations.

We concluded our task with a summary and provided a list of references at the end.

References

[1] Marcus-Newhall A, Miller N, Holtz R, et al. Cross-cutting category membership with role assignment: A means of reducing intergroup bias[J]. British Journal of Social Psychology, 1993, 32(2). DOI:10.1111/j.2044-8309.1993.tb00991.x.

[2] Aishwarya G A G, Su H K, Kuo W K. Personalized E-commerce: Enhancing Customer Experience through Machine Learning-driven Personalization[C]//2024 IEEE International Conference on Information Technology, Electronics and Intelligent Communication Systems (ICITEICS). [2024-12-15]. DOI:10.1109/ICITEICS61368.2024.10624901.

[3] Ascarza E, Israeli A. Eliminating unintended bias in personalized policies using Bias Eliminating Adapted Trees (BEAT)[J]. Social Science Electronic Publishing. [2024-12-15]. DOI:10.2139/ssrn.3908088.

[4] Lefèvre M, Guin N, Jean-Daubias S. A Generic Approach for Assisting Teachers During Personalization of Learners' Activities[C]//International Conference on User Modeling, Adaptation, and Personalization. 2012.

[5] Ascarza E, Israeli A. Eliminating unintended bias in personalized policies using bias-eliminating adapted trees (BEAT)[J]. Proceedings of the National Academy of Sciences of the United States of America, 2022, 119(11): e2115293119. DOI:10.1073/pnas.2115293119.

[6] Otterbacher J. Computer Vision, Human Likeness, and Problematic Behaviors: Distinguishing Stereotypes from Social Norms[J]. Adjunct Proceedings of the 31st ACM Conference on User Modeling, Adaptation and Personalization, 2023. DOI:10.1145/3563359.3597381.

[7] Eversdijk M, Habibovi M, Willems D L, et al. Ethics of Wearable-Based Out-of-Hospital Cardiac Arrest Detection[J]. Circulation: Arrhythmia and Electrophysiology, 2024, 17(9): e012913. DOI:10.1161/CIRCEP.124.012913.

[8] Marici M, Runcan R, Cheia G, et al. The impact of coercive and assertive communication styles on children's perception of chores: an experimental investigation[J]. Frontiers in Psychology, 2024. DOI:10.3389/fpsyg.2024.1266417.

[9] Rahman M A, Hossain M S. Artificial Intelligence in Journalism: A Comprehensive Review[J]. 2024.

[10] Klén R. Leveraging Artificial Intelligence to Optimize Transcranial Direct Current Stimulation for Long COVID Management: A Forward-Looking Perspective[J]. Brain Sciences, 2024, 14. DOI:10.3390/brainsci14080831.

[11] Carson A B. Public Discourse in the Age of Personalization: Psychological Explanations and Political Implications of Search Engine Bias and the Filter Bubble[J]. 2015.

[12] Celis L E, Kapoor S, Salehi F, et al. An Algorithmic Framework to Control Bias in Bandit-based Personalization[J]. 2018. DOI:10.48550/arXiv.1802.08674.

[13] Bozdag E. Bias in algorithmic filtering and personalization[J]. Ethics and Information Technology, 2013, 15(3): 209-227. DOI:10.1007/s10676-013-9321-6.
